Assembly and Annotation Collection

This workflow can assemble and annotate multiple genomes in one submission. The results from one submission will be packaged together into a single file. The workflow uses the shovill and Prokka software for assembly and annotation of genomes, respectively, as well as QUAST for assembly quality assessment. The specific Galaxy tools are listed in the table below.

| Tool Name | Owner | Tool Revision | Toolshed Installable Revision | Toolshed |
|---|---|---|---|---|
| bundle_collections | nml | 705ebd286b57 | 2 (2020-08-24) | Galaxy Main Shed |
| shovill | iuc | 8d1af5db538d | 5 (2020-06-28) | Galaxy Main Shed |
| prokka | crs4 | 111884f0d912 | 17 (2020-06-04) | Galaxy Main Shed |
| quast | iuc | ebb0dcdb621a | 8 (2020-02-03) | Galaxy Main Shed |

To install these tools, please proceed through the following steps.

Step 1: Galaxy Conda Setup

Galaxy uses Conda to automatically install some of the dependencies for this workflow. Please verify that your Galaxy version is >= v16.01 and that Galaxy has been set up to use Conda (by modifying the appropriate configuration settings; see the Galaxy documentation on Conda dependency resolution for details). A method for running this workflow on a Galaxy version < v16.01 is described in FAQ/Conda dependencies.
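The version requirement can be checked from the command line. The sketch below is one possible approach, not part of the official setup. It assumes it is run from the directory that contains your Galaxy checkout (`galaxy/`, matching the paths used elsewhere on this page), that `lib/galaxy/version.py` defines `VERSION_MAJOR` (true for Galaxy releases in this era), and that `sort -V` is available (GNU coreutils). `GALAXY_ROOT` and `version_ge` are hypothetical names introduced for illustration.

```shell
#!/usr/bin/env bash
# Sketch: check that the local Galaxy release is >= 16.01.
set -euo pipefail

# Assumption: Galaxy is checked out in ./galaxy; override with GALAXY_ROOT if not.
GALAXY_ROOT="${GALAXY_ROOT:-galaxy}"

# version_ge A B: succeeds when version A >= version B (relies on `sort -V`).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Read VERSION_MAJOR (e.g. "20.05") from Galaxy's version module, if present.
galaxy_major=$(sed -nE "s/^VERSION_MAJOR = ['\"]([^'\"]+)['\"].*/\1/p" \
  "$GALAXY_ROOT/lib/galaxy/version.py" 2>/dev/null || true)

if [ -z "$galaxy_major" ]; then
  echo "Could not read $GALAXY_ROOT/lib/galaxy/version.py; set GALAXY_ROOT." >&2
elif version_ge "$galaxy_major" 16.01; then
  echo "Galaxy $galaxy_major is >= 16.01"
else
  echo "Galaxy $galaxy_major is older than 16.01; see FAQ/Conda dependencies." >&2
fi
```

Comparing with `sort -V` instead of as plain strings keeps multi-digit components such as `9.10` vs `16.01` ordered numerically.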

Addressing shovill-related issues

PILON Java/JVM heap allocation issues

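Pilon, which shovill runs to correct the assembly, is a Java application and may need its maximum JVM heap size set explicitly, for example through the _JAVA_OPTIONS environment variable (e.g. _JAVA_OPTIONS=-Xmx4g).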
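One way to apply this is to reuse the conda `activate.d`/`deactivate.d` mechanism described below for `SHOVILL_RAM`. The following is a minimal sketch under stated assumptions: Pilon runs from the same conda environment as shovill, the environment lives at the path shown (adjust `SHOVILL_ENV` for your installation), and `PILON_HEAP` and the `pilon-heap.sh` file name are hypothetical choices, not names used by Galaxy or conda.

```shell
#!/usr/bin/env bash
# Sketch: set the JVM heap for Pilon whenever the shovill conda environment is activated.
set -euo pipefail

# Assumption: path to the conda environment containing shovill (and Pilon).
SHOVILL_ENV="${SHOVILL_ENV:-galaxy/deps/_conda/envs/__shovill@1.0.4}"
# Hypothetical variable; 4g matches the example heap size above.
HEAP="${PILON_HEAP:-4g}"

mkdir -p "$SHOVILL_ENV/etc/conda/activate.d" "$SHOVILL_ENV/etc/conda/deactivate.d"

# On activation: remember any existing _JAVA_OPTIONS, then set the maximum heap.
printf 'export _OLD_JAVA_OPTIONS="${_JAVA_OPTIONS:-}"\nexport _JAVA_OPTIONS="-Xmx%s"\n' "$HEAP" \
  > "$SHOVILL_ENV/etc/conda/activate.d/pilon-heap.sh"

# On deactivation: restore the previous value and drop the saved copy.
printf 'export _JAVA_OPTIONS="${_OLD_JAVA_OPTIONS:-}"\nunset _OLD_JAVA_OPTIONS\n' \
  > "$SHOVILL_ENV/etc/conda/deactivate.d/pilon-heap.sh"
```

Saving and restoring the previous value keeps the setting scoped to the shovill environment rather than leaking into other tools.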

Shovill memory issue

By default, the memory allocated to shovill should be set automatically from Galaxy's $GALAXY_MEMORY_MB environment variable (see the planemo docs for more information). This can be overridden by setting the $SHOVILL_RAM environment variable. One way to set $SHOVILL_RAM is through the conda environment that contains shovill: create scripts in its etc/conda/activate.d and etc/conda/deactivate.d directories, which conda runs when the environment is activated and deactivated, respectively.

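```bash
# Change into the conda environment that contains shovill
# (the exact path and version depend on your Galaxy installation)
cd galaxy/deps/_conda/envs/__shovill@1.0.4

mkdir -p etc/conda/activate.d
mkdir -p etc/conda/deactivate.d

# On activation: save any existing value, then give shovill 8 GB of RAM
echo -e "export _OLD_SHOVILL_RAM=\$SHOVILL_RAM\nexport SHOVILL_RAM=8" >> etc/conda/activate.d/shovill-ram.sh

# On deactivation: restore the saved value
echo -e "export SHOVILL_RAM=\$_OLD_SHOVILL_RAM" >> etc/conda/deactivate.d/shovill-ram.sh
```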
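To check the save-and-restore logic of scripts like these without touching a real Galaxy environment, the sketch below builds a throwaway directory and sources the two scripts directly, which approximates what conda does when it sources the `*.sh` files in `activate.d` and `deactivate.d`. The temporary directory and the starting value of 16 are illustrative assumptions only.

```shell
#!/usr/bin/env bash
# Sketch: simulate conda activation/deactivation of the shovill-ram.sh scripts.
set -euo pipefail

# Throwaway stand-in for the real shovill conda environment.
env_dir=$(mktemp -d)
mkdir -p "$env_dir/etc/conda/activate.d" "$env_dir/etc/conda/deactivate.d"

# Same content as the scripts above: save and set on activate, restore on deactivate.
printf 'export _OLD_SHOVILL_RAM=$SHOVILL_RAM\nexport SHOVILL_RAM=8\n' \
  > "$env_dir/etc/conda/activate.d/shovill-ram.sh"
printf 'export SHOVILL_RAM=$_OLD_SHOVILL_RAM\n' \
  > "$env_dir/etc/conda/deactivate.d/shovill-ram.sh"

export SHOVILL_RAM=16   # pretend a value was already set before activation

. "$env_dir/etc/conda/activate.d/shovill-ram.sh"     # what conda does on activate
ram_active=$SHOVILL_RAM
. "$env_dir/etc/conda/deactivate.d/shovill-ram.sh"   # what conda does on deactivate
ram_restored=$SHOVILL_RAM

echo "while active: $ram_active, after deactivate: $ram_restored"
rm -rf "$env_dir"
```

If the restore line ends up in `activate.d` instead of `deactivate.d`, both lines run on activation and the override is immediately undone, so a check like this is a cheap way to catch that mistake.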

Alternatively, you can set environment variables within the Galaxy Job Configuration.
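Whichever mechanism sets the variables, the precedence described in this section can be modeled as a small function. This sketch mirrors the behavior as described on this page and is not the Galaxy shovill wrapper's actual command line. It assumes $SHOVILL_RAM is given in GB (as in the `SHOVILL_RAM=8` example), that $GALAXY_MEMORY_MB is in MB, and it uses a hypothetical fallback of 4 GB when neither is set.

```shell
#!/usr/bin/env bash
# Sketch: model of the RAM precedence described above (not the real wrapper).
set -euo pipefail

DEFAULT_RAM_GB=4   # hypothetical fallback when neither variable is set

# shovill_ram_gb: prints the RAM, in GB, that shovill would be given.
shovill_ram_gb() {
  if [ -n "${SHOVILL_RAM:-}" ]; then
    echo "$SHOVILL_RAM"                   # explicit override, already in GB
  elif [ -n "${GALAXY_MEMORY_MB:-}" ]; then
    echo $(( GALAXY_MEMORY_MB / 1024 ))   # Galaxy reports MB; convert to whole GB
  else
    echo "$DEFAULT_RAM_GB"
  fi
}

echo "shovill would get $(shovill_ram_gb) GB"
```

For example, with `GALAXY_MEMORY_MB=16384` and no $SHOVILL_RAM the function prints 16, while adding `SHOVILL_RAM=8` makes it print 8.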

Step 2: Install Galaxy Tools

Please install all the Galaxy tools in the table above by logging into Galaxy, navigating to Admin > Search and browse tool sheds, searching for the appropriate Tool Name and installing the appropriate Toolshed Installable Revision.

Installation progress can be checked by monitoring the Galaxy log files (galaxy/*.log). On completion, each tool should be listed as Installed under Admin > Manage installed tool shed repositories.

Note: Prokka downloads several large databases and may take some time to install.
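As an alternative to clicking through the Admin pages, the same tool revisions can be described in a tool list file for Ephemeris, the Galaxy project's command-line installer. The sketch below writes such a list using the changeset hashes from the Tool Revision column of the table above and only calls `shed-tools` if it is installed and an API key is provided. `GALAXY_URL`, `GALAXY_API_KEY`, the output file name and the section label `IRIDA` are hypothetical; please confirm the options with `shed-tools install --help` for your Ephemeris version.

```shell
#!/usr/bin/env bash
# Sketch: write an Ephemeris tool list for this workflow's tools, then optionally install it.
set -euo pipefail

TOOL_LIST="${TOOL_LIST:-assembly-annotation-tools.yml}"   # hypothetical file name

# Changeset hashes are quoted so YAML always reads them as strings.
cat > "$TOOL_LIST" <<'EOF'
tools:
  - name: bundle_collections
    owner: nml
    revisions: ['705ebd286b57']
    tool_shed_url: https://toolshed.g2.bx.psu.edu
    tool_panel_section_label: IRIDA
  - name: shovill
    owner: iuc
    revisions: ['8d1af5db538d']
    tool_shed_url: https://toolshed.g2.bx.psu.edu
    tool_panel_section_label: IRIDA
  - name: prokka
    owner: crs4
    revisions: ['111884f0d912']
    tool_shed_url: https://toolshed.g2.bx.psu.edu
    tool_panel_section_label: IRIDA
  - name: quast
    owner: iuc
    revisions: ['ebb0dcdb621a']
    tool_shed_url: https://toolshed.g2.bx.psu.edu
    tool_panel_section_label: IRIDA
EOF

# Only attempt the install when Ephemeris is available and an admin API key is set.
if command -v shed-tools >/dev/null 2>&1 && [ -n "${GALAXY_API_KEY:-}" ]; then
  shed-tools install -g "${GALAXY_URL:-http://localhost:8080}" -a "$GALAXY_API_KEY" -t "$TOOL_LIST"
else
  echo "Wrote $TOOL_LIST; install Ephemeris and set GALAXY_API_KEY to run shed-tools." >&2
fi
```

Quoting the hashes matters because an unquoted changeset made only of digits and an `e` (for example `1e5`) would be parsed by YAML as a number.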

Step 3: Testing Pipeline

A Galaxy workflow and some test data have been included with this documentation to verify that all tools are installed correctly. To test this pipeline, please proceed through the following steps.

- Upload the Assembly Annotation Collection Galaxy Workflow by going to Workflow > Upload or import workflow.

- Upload the sequence reads by going to Analyze Data and then clicking on the upload files from disk icon. Select the test/reads files. Make sure to change the Type of each file from Auto-detect to fastqsanger. Once uploaded, the read files should appear in your history.

-

Construct a dataset collection of the paired-end reads by clicking the Operations on multiple datasets icon

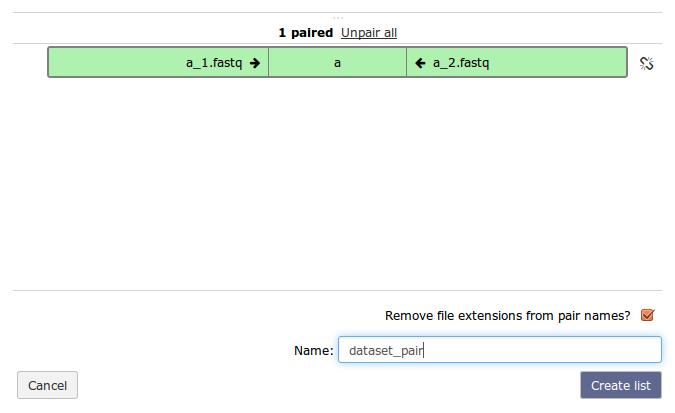

. Please check off the two .fastq files and then go to For all selected… > Build List of dataset pairs. You should see a screen that looks as follows.

. Please check off the two .fastq files and then go to For all selected… > Build List of dataset pairs. You should see a screen that looks as follows.

- This should pair your reads automatically and name the sample a. Enter a name for this paired dataset collection at the bottom and click Create list.

- Run the uploaded workflow by clicking on Workflow, clicking on the name of the workflow AssemblyAnnotationCollection-shovill-prokka-paired_reads-v0.5 (imported from uploaded file) and clicking Run. The dataset collection should be filled in automatically. At the very bottom of the screen, click Run workflow.

-

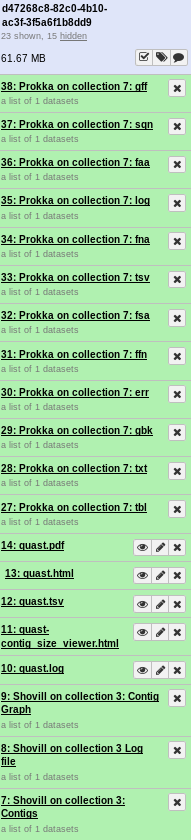

If everything was installed correctly, you should see each of the tools run successfully (turn green). On completion this should look like.

If any tool turns red, click its view details icon for more information.
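If a red tool points at the input reads, one thing worth ruling out is the quality encoding, since the fastqsanger type means Sanger-style Phred+33 qualities. The sketch below is a common heuristic, not a Galaxy feature: it finds the lowest quality character in an uncompressed, four-lines-per-record FASTQ file. Codes below 59 (`;`) only occur with the +33 offset; if every code is 64 (`@`) or higher the file may be Phred+64, although very high-quality +33 data can look the same. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: guess a FASTQ file's quality offset from its lowest quality character.
set -euo pipefail

# min_qual_code FILE: prints the smallest ASCII code on the quality lines
# (every 4th line of an uncompressed, 4-line-per-record FASTQ file).
min_qual_code() {
  awk 'NR % 4 == 0' "$1" | tr -d '\n' | od -An -v -tu1 |
    awk '{ for (i = 1; i <= NF; i++) if (!seen || $i < m) { m = $i; seen = 1 } }
         END { if (seen) print m }'
}

# fastq_encoding_guess FILE: prints phred33, possibly-phred64, ambiguous or unknown.
fastq_encoding_guess() {
  local code
  code=$(min_qual_code "$1")
  if [ -z "$code" ]; then
    echo "unknown"
  elif [ "$code" -lt 59 ]; then
    echo "phred33"             # below ';' is only possible with the +33 offset
  elif [ "$code" -ge 64 ]; then
    echo "possibly-phred64"    # all codes >= '@': +64, or unusually high-quality +33
  else
    echo "ambiguous"
  fi
}
```

For example, `fastq_encoding_guess path/to/reads.fastq` should print `phred33` for reads that are correctly typed as fastqsanger; gzipped files would need to be decompressed first.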

If everything was successful, all dependencies for this pipeline have been installed correctly.